While almost every product organization wants its products to be AI-powered or entirely AI-based, AI governance is becoming a key focus across organizations, especially for AI-based product companies, and the focus is undoubtedly justified.

Why does AI need to be responsible?

AI is like a self-driving car over which we don't have 100% control. Unless we know how our AI-powered product affects its users and the non-users around them, we have lost the leash.

We have already seen unsavoury incidents where "Artificial Intelligence" has turned into "Genuine Stupidity."

Over the last few years, the misuse of AI and insufficient datasets have, in some cases, blurred the line between "innovation independence" and the "free will" of those using it.

Some such cases are listed below (not all of them are referenced directly):

- Self-driving cars killed pedestrians (https://www.ctlawsc.com/autonomous-and-self-driving-car-accidents/).

- Facial recognition apps flagged certain facial and physical features as terrorism-prone.

- Some employers assigned productivity scores to employees based on guessed age, facial and physical features, biologically assigned or identified sex, etc.

- Some recruitment firms rejected prospective job applicants based on their age, sex, and physical features, without assessing their capabilities.

These are a few of the scenarios in which “so-called” innovation robs people of opportunities without assessing them, or without any direct link to their performance, and has even robbed humans of their lives.

This is when Artificial “Things” become a risk to “Human” lives, just as we saw in the 2004 movie “I, Robot”, starring Will Smith.

As we can see, the safety line between innovation and disaster can only be strengthened by governance around how AI products are built. Every product team that uses, builds, or sells AI products needs to align with these governance principles.

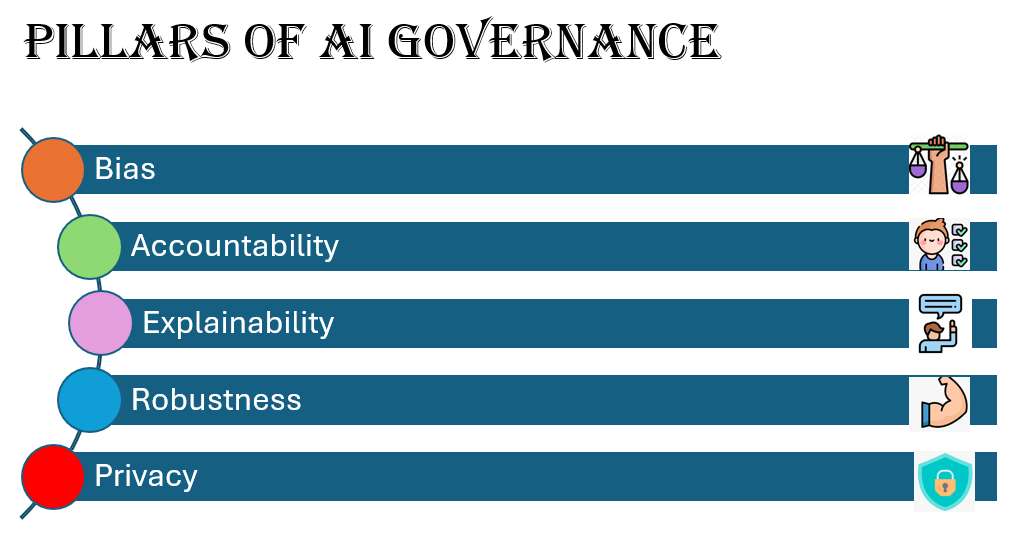

Pillars of AI governance:

Broadly, we see five pillars that any AI product builder or owner needs to iron out: bias, accountability with transparency, clarity and explainability, robustness, and privacy. Let’s take a shallow yet sufficient dive into these areas.

Bias:

Bias is inherent in the feelings, thoughts, actions, and beliefs of our species. We imbibe it through our upbringing, acquired learning, perception-forming experiences, and day-to-day interactions.

Bias can be built individually or induced by a collective thought process, and it can be conscious or unconscious. Let’s explore these types a bit further, with examples in the context of AI and how they can affect a product.

Selection Bias:

How an AI application behaves is decided by the machine learning algorithm (classical ML or deep learning with neural networks) behind its decision-making process. The dataset selected for training defines what the model learns.

It is much like raising children: they learn from external information as well as the information you select for them. You shape a child’s thought process with a range of props and stories. The more diverse the information you feed them, the broader their thinking becomes and the more intelligent their decision-making.

Similarly, the more diverse the dataset (for example, through stratified sampling, without going into statistical jargon), the better the AI product works. The datasets need to represent all possible categories of the influencing factors.
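As a minimal sketch of stratified sampling in plain Python, the function below splits records into training and test sets while preserving each category's share, so small groups are not silently left out of evaluation. It assumes each record is a dict with a categorical field; the `age_band` field and the applicant data are hypothetical. Libraries such as scikit-learn offer the same idea through the `stratify` parameter of `train_test_split`.

```python
import random
from collections import Counter, defaultdict


def stratified_split(records, key, test_fraction=0.2, seed=42):
    """Split records into train/test lists so that each category of `key`
    keeps roughly the same share in both lists as in the full dataset."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for members in groups.values():
        members = list(members)  # copy before shuffling
        rng.shuffle(members)
        # At least one example per category goes to the test set; if the
        # category has more than one example, at least one stays in training.
        n_test = max(1, round(len(members) * test_fraction))
        if len(members) > 1:
            n_test = min(n_test, len(members) - 1)
        test.extend(members[:n_test])
        train.extend(members[n_test:])
    return train, test


# Hypothetical applicant data, skewed towards younger applicants.
applicants = (
    [{"age_band": "18-30"}] * 60
    + [{"age_band": "31-50"}] * 30
    + [{"age_band": "51+"}] * 10
)
train, test = stratified_split(applicants, key="age_band")
print(Counter(r["age_band"] for r in test))
```

With a 20% test fraction, the test set keeps 12, 6, and 2 records from the three age bands, mirroring the 60/30/10 mix. Stratification only preserves the mix you already have; if a group is under-represented in the collected data, more data still has to be gathered.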

Below are some explanations with examples.

Some languages mark gender in their pronouns. In English, for example, a narrative identifies the actor or speaker as “he”, “she”, or “they”. Other languages, such as Bengali, do not differentiate pronouns or verbs by gender.

Suppose an NLP (natural language processing) based translation app tries to convert a Bengali phrase into English.

It might not know whether the phrase refers to a male or a female unless it has learned extensively from diverse, contextual Bengali data; otherwise it may default to whichever pronoun dominated its training data.

In some real cases, AI apps flagged individuals as likely to reoffend based on facial features and ethnicity, mostly people of colour. Judicial systems ended up using such tools, and innocent people’s lives were affected (remember the movie “Minority Report”, starring Tom Cruise).

Similarly, if we build an AI-powered recruitment app that rejects candidates who fit the profession, or a “delinquency checker app” that rejects loan applications based on ethnicity, it can crush many dreams before they even get a chance to blossom.

Product teams need to ensure wide representation of all possible categories when building their product and training their models, to keep selection bias as close to zero as possible.
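One way to catch this kind of bias after a model is built is to compare outcomes across groups. The sketch below computes each group's selection rate and flags groups whose rate falls below 80% of the best-performing group's, a rule of thumb (the "four-fifths rule") used in US employment-selection guidelines. The group labels and decision data are hypothetical, and a flag is a prompt for investigation rather than proof of bias.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs.
    Returns {group: fraction of that group's applicants who were selected}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_flags(decisions, threshold=0.8):
    """Return {group: ratio} for groups whose selection rate is below
    `threshold` times the highest group's selection rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so there is no ratio to compare
    return {g: rate / best for g, rate in rates.items() if rate / best < threshold}


# Hypothetical screening results: group A has 50/100 selected, group B 30/100.
decisions = [("A", i < 50) for i in range(100)] + [("B", i < 30) for i in range(100)]
print(selection_rates(decisions))
print(disparate_impact_flags(decisions))
```

Here group B's rate (0.3) is only 0.6 times group A's (0.5), so B is flagged for review.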

Collective Bias:

Collective bias occurs in AI products when the product is built without keeping the entire user base in mind, so some user groups lose representation. A group-think, siloed, or incomplete thought process takes over while selecting product features and training the models.

For example, in the early days of the YouTube app on phones, a specific user group started uploading upside-down videos, because no one had considered left-handed users while building the feature.

User analysis is key when building any product, whether an AI product or a simple app.

Diverse user representation helps rationalize features.

Say we build an AI app that allows entry to a lavatory based on guests’ appearance. In today’s gender-sensitive society, the app may cause havoc by denying a non-binary person entry, which is painful for that person, too.

So, while building an AI product, focus sensitively and collectively on the specific needs of every user group instead of falling into collective bias.

Assumptive or Implicit Bias:

Assumption-based bias, or implicit bias, impacts AI products & their behaviour.

Imagine we build an image-processing app that identifies objects. Training an object identification model requires tagged images.

Many pre-tagged training datasets (Google Open Images V4, Microsoft COCO, etc.) are available for such training, but one can also build one by collecting royalty-free images and tagging all of them.

If we do not use a diverse image set for tagging and preparation during model training, the AI is likely to assume that any striped animal is a zebra.

The images below show a zebra, a cat with stripes, and a coat with stripes. AI apps should be able to tell these apart intelligently instead of assuming that every striped object is a zebra.
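Before training, a team can audit its tagged data for this kind of shortcut. The sketch below assumes each sample carries a class label plus a set of visual attribute tags (the attribute tags and counts are hypothetical; many public datasets provide only class labels). For every attribute, it reports the label that co-occurs with it most often and flags attributes where one label dominates, a hint that the model may learn "striped means zebra".

```python
from collections import Counter, defaultdict


def attribute_label_audit(samples, max_share=0.9):
    """samples: list of (label, set_of_attribute_tags) pairs.
    Returns {attribute: (dominant_label, share)} for attributes where a
    single label accounts for more than `max_share` of the samples."""
    by_attribute = defaultdict(Counter)
    for label, attributes in samples:
        for attribute in attributes:
            by_attribute[attribute][label] += 1

    flagged = {}
    for attribute, label_counts in by_attribute.items():
        label, count = label_counts.most_common(1)[0]
        share = count / sum(label_counts.values())
        if share > max_share:
            flagged[attribute] = (label, share)
    return flagged


# Hypothetical tagged dataset: almost every striped image is a zebra.
samples = (
    [("zebra", {"striped", "four_legs"})] * 95
    + [("cat", {"striped", "four_legs"})] * 3
    + [("coat", {"striped"})] * 2
    + [("cat", {"four_legs"})] * 50
)
print(attribute_label_audit(samples))
```

The audit flags `striped`, since 95 of the 100 striped images are zebras, which suggests collecting more striped cats, coats, and other non-zebra examples.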

Accountability with transparency:

When a product company is building an AI product, it’s important to share which algorithm, which neural network, and which dataset it uses (if possible, with a link to the dataset on a public platform such as Kaggle, without sharing proprietary or personally identifiable information, of course).

Any company using, building, or selling AI products must be transparent about how a model is built and how its predictions and suggestions are served.
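One lightweight way to publish this information is a "model card" kept alongside the model. The sketch below is a hypothetical, minimal structure; the field names, the `loan-risk-v1` model, and its contents are illustrative assumptions, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ModelCard:
    """Hypothetical minimal model card describing how a model was built."""
    model_name: str
    algorithm: str
    training_data: str  # description or public link; never raw PII
    intended_use: str
    known_limitations: List[str] = field(default_factory=list)
    excluded_data: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        # Serialize every field so the card can be published or versioned.
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    model_name="loan-risk-v1",
    algorithm="Gradient-boosted decision trees",
    training_data="Public dataset (e.g. a Kaggle page) plus internal data with PII removed",
    intended_use="Decision support for loan officers; not for automatic rejection",
    known_limitations=["Few applicants over 70 in the training data"],
    excluded_data=["names", "addresses", "ethnicity"],
)
print(card.to_json())
```

Keeping the card in version control next to the model makes it easier to show which dataset and algorithm produced a given prediction.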

Clarity & Explainability:

Any company using, building, or selling AI products must also be able to explain how each outcome is arrived at.

For example, suppose a product company has built an AI-powered app that decides who may sit an exam. If it selects candidates based on vague parameters, a deserving candidate may be rejected without a clear reason.

If applicants challenge the decision, it should be explainable to the relevant parties and clearly understood by both the AI's users and its builders.
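For the simplest kind of model, a linear score, an exact explanation comes almost for free: each feature contributes `weight * value`, and ranking those contributions shows what drove the decision. The sketch below assumes a hypothetical exam-admission model; the feature names, weights, and threshold are illustrative. For complex models, teams typically rely on post-hoc explanation techniques such as SHAP or LIME instead.

```python
def explain_linear_score(weights, bias, applicant, threshold):
    """Score an applicant with a linear model and return the decision,
    the score, and each feature's contribution (weight * value),
    ordered by absolute contribution, largest first."""
    contributions = {name: weights[name] * applicant[name] for name in weights}
    score = bias + sum(contributions.values())
    decision = "accepted" if score >= threshold else "rejected"
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return decision, score, ranked


# Hypothetical admission model and candidate.
weights = {"mock_test_score": 0.5, "attendance_rate": 2.0, "late_submissions": -1.5}
applicant = {"mock_test_score": 8, "attendance_rate": 0.75, "late_submissions": 3}

decision, score, ranked = explain_linear_score(weights, bias=-2.0, applicant=applicant, threshold=1.0)
print(decision, score)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

This candidate is rejected with a score of -1.0, and the explanation shows late submissions (-4.50) as the largest factor, which is something a candidate can understand and, if the data is wrong, contest.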

Robustness:

Robustness matters most where lives are at stake, for example when an AI model drives a car or performs a self-driven heart operation in a medical facility. In such severe applications, it's crucial to validate (and revalidate) how vulnerable the AI application is to external attacks, or even to modification of its real-world input data.

For example, a self-driving car relies on a deep learning model, typically a convolutional neural network (CNN), to recognize what its cameras see. If even a single pixel of the input is modified (a so-called one-pixel attack), the car may identify an entirely different object. It might identify an old lady with a bulldog as a “clear” sign (I am joking and exaggerating). Still, such misidentification by an AI-powered app or device can have disastrous, life-threatening effects.
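To make the idea concrete, the sketch below tests a classifier's sensitivity to single-pixel changes by brute force: it tries setting each pixel to a few extreme values and records every change that flips the prediction. It is a simplified illustration under stated assumptions: the "classifier" is a hypothetical linear score over a 3x3 grayscale image standing in for a CNN, and the published one-pixel attack searches with differential evolution against real networks rather than trying every pixel.

```python
def classify(image, weights, bias=0.0):
    """Toy stand-in for a CNN: a linear score over pixel values."""
    score = bias + sum(
        w * p for w_row, p_row in zip(weights, image) for w, p in zip(w_row, p_row)
    )
    return "pedestrian" if score > 0 else "clear road"


def one_pixel_vulnerabilities(image, weights, bias=0.0, candidate_values=(0, 255)):
    """Try every single-pixel change to each candidate value and return the
    original prediction plus the (row, col, value) changes that flip it."""
    original = classify(image, weights, bias)
    flips = []
    for r, row in enumerate(image):
        for c, pixel in enumerate(row):
            for value in candidate_values:
                if value == pixel:
                    continue
                perturbed = [list(pixels) for pixels in image]  # copy the image
                perturbed[r][c] = value
                if classify(perturbed, weights, bias) != original:
                    flips.append((r, c, value))
    return original, flips


# Hypothetical 3x3 camera patch and model weights (the centre pixel matters most).
weights = [[1, 1, 1], [1, 4, 1], [1, 1, 1]]
image = [[100, 100, 100], [100, 150, 100], [100, 100, 100]]

original, flips = one_pixel_vulnerabilities(image, weights, bias=-1000)
print(original, flips)
```

The patch is classified as a pedestrian, but blacking out the single centre pixel flips it to "clear road", exactly the kind of fragility robustness testing should surface before deployment.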

Imagine an AI-powered Robocop starting to shoot everybody across town, if you remember the 1987 movie "RoboCop".

Privacy:

We have so far discussed how data is important for an AI-powered app and how a large and diverse dataset is required for an AI model to have higher accuracy.

It's also important to note that data shouldn't be collected secretly, especially data belonging to an entity such as a person or a business (unless they have publicly shared it themselves).

While collecting data, it's essential to be clear about what data is collected, why and how it is collected, what purpose it will be used for, and how its security will be ensured both as "data at rest" and as "data in motion".
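Two habits that support this are data minimization (keep only the fields the declared purpose needs) and pseudonymization (replace direct identifiers before storage). The sketch below uses Python's standard `hmac` module to turn an email address into a keyed SHA-256 token; the record fields and the allowed-field list are hypothetical. It does not cover encryption at rest or TLS in motion, which are separate controls.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with an HMAC-SHA256 token. The same
    identifier always maps to the same token under the same key, so records
    can still be joined, but the token can't be linked back without the key."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(raw: dict, key: bytes, allowed_fields: set) -> dict:
    """Keep only the fields needed for the declared purpose and swap the
    email address for a pseudonymous token."""
    record = {k: v for k, v in raw.items() if k in allowed_fields}
    record["user_token"] = pseudonymize(raw["email"], key)
    return record


# In practice the key would live in a key-management service, not in code.
key = secrets.token_bytes(32)
raw = {
    "email": "asha@example.com",
    "age_band": "31-50",
    "city": "Pune",
    "consented_purpose": "model_training",
}
record = prepare_record(raw, key, allowed_fields={"age_band", "consented_purpose"})
print(record)
```

The stored record keeps the age band, the consented purpose, and a 64-character token; the email and city never reach the training store.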

To summarize, AI governance will be a key consideration as we enter the magical world of AI. Without it, multiple fear factors and unintended incidents will keep the full potential of AI from being realized.
